So I’m going to start from the ground up: in order to build an object that is intelligent, what are the basic requirements I need to achieve? We will call these the artificial intelligence basics. The concept of artificial intelligence causes a lot of confusion, so for clarity let’s divide what I want to build into two broad categories:

- The practical / real life scenario. We’ll call this “Artificial Intelligence Basics – Lite”

- The idealistic scenario. We’ll call this “Artificial Intelligence Basics – Ultimate”

My curiosity and spirit of discovery want to achieve the second scenario, by which I mean a complete artificial intelligence that can have its own internal (mental) existence. There are questions as to what that might entail from a moral perspective or from an existential standpoint, but I leave that discussion for a later post in this series. The first scenario is what we are actually building in the world today, or may build from a practical standpoint, for example what Google, Microsoft and Facebook are working on. Let’s consider each of these now:

Artificial Intelligence Basics – Lite :

What Google, Microsoft and Facebook are doing in the field of artificial intelligence is remarkable, but as the field advances it becomes more and more complicated. If you search for the artificial intelligence or machine learning projects that big companies are working on, you face a barrage of sophisticated terminology such as deep learning, DeepFace, DeepText, neural networks, embodied agents, symbolic and sub-symbolic AI, brain simulation and cybernetics. To simplify things, we are going to stick to the basics of artificial intelligence in this article. There is no hard and fast rule, but I’m going to try and summarize the 5 basics of this Lite version first:

1. Information Processing / Problem Solving

As discussed in my post the Concept of Artificial Intelligence, this is the core of what computers can do. In humans, this skill is part of the executive functions that are accomplished (theoretically) in the dorsolateral prefrontal cortex and parietal cortex of the brain. In computers this is done by logic circuits that perform mathematical calculations in binary. The CPU carries out these calculations. The RAM helps by storing a piece of information temporarily while the CPU is processing other parts of the calculation, much like working memory (another executive function in humans). The hard drive (or other persistent storage) is where information is kept for long periods until it is edited, much like long-term memory in humans (which is not an executive function).
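To make the idea of logic circuits doing arithmetic in binary a little more concrete, here is a minimal sketch of an adder built only from AND, OR and XOR operations. The function names `full_adder` and `add_binary` are my own illustrative choices, and a real CPU does this in hardware rather than in Python.

```python
def full_adder(a, b, carry_in):
    """Add three single bits using only logic operations."""
    total = a ^ b ^ carry_in                      # XOR gives the sum bit
    carry_out = (a & b) | (carry_in & (a ^ b))    # AND/OR give the carry bit
    return total, carry_out


def add_binary(x_bits, y_bits):
    """Add two equal-length bit lists, least significant bit first."""
    result, carry = [], 0
    for a, b in zip(x_bits, y_bits):
        bit, carry = full_adder(a, b, carry)
        result.append(bit)
    result.append(carry)  # final carry becomes the top bit
    return result


# 5 is [1, 0, 1] and 3 is [1, 1, 0] when written least significant bit first
print(add_binary([1, 0, 1], [1, 1, 0]))
```

Each call to `full_adder` plays the role of one small circuit, and chaining them bit by bit is enough to add whole numbers.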

So every computer is, in a way, artificially intelligent in the sense that it can do human-like processing and problem solving of well-defined mathematical problems. The ability to convert a practical problem into a mathematical one takes this one step further. This is where the next three ideas come in, i.e. recognition, understanding and communication.

2. Recognition

Recognition works on many levels. Let’s take an example: I want my virtual assistant to find me a shop, with good ratings, that is near me and sells wheelchairs. To make it harder, I don’t want to type this in; I would rather talk to it like a person. How will my virtual assistant be able to solve my problem? Being able to recognise my spoken words is the first step, and this is where speech recognition technology is progressing (Apple and Google are at the forefront of this).

Now, just being able to capture the sound wave patterns and relate them to a database of previously captured sound wave patterns isn’t enough. Yes, by doing this the software can recognise what each word probably is, but that doesn’t mean anything on its own. It just relates one sound pattern to others which seem similar, so the only thing gained is a differentiation from other patterns (a classification).
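As a rough illustration of relating a new pattern to a database of previously captured ones, here is a minimal nearest-match sketch. The three-number "patterns" and the names `REFERENCE_PATTERNS`, `distance` and `recognise` are made up for this example; real speech recognition uses far richer features and models.

```python
import math

# Hypothetical database: each word maps to an invented feature vector
# standing in for a stored sound wave pattern.
REFERENCE_PATTERNS = {
    "shop": [0.9, 0.1, 0.3],
    "ship": [0.8, 0.2, 0.4],
    "good": [0.1, 0.9, 0.5],
}


def distance(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def recognise(pattern):
    """Return the stored word whose pattern is closest to the new one."""
    return min(
        REFERENCE_PATTERNS,
        key=lambda word: distance(pattern, REFERENCE_PATTERNS[word]),
    )


print(recognise([0.88, 0.12, 0.31]))
```

Notice that the function only answers "which stored pattern is this most like?". It attaches a label, but no meaning, which is exactly the limitation described above.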

3. Understanding (?Comprehension)

This is one of the entrances to the rabbit hole of AI research. Up to the previous step the assistant is only dealing with a set of differentiated information, i.e. ‘shop’, ‘good’, ‘near’, ‘me’ and ‘wheelchairs’ are all separate things, but beyond that they carry no sense. So how can we add meaning to this and start making some sense?

One approach is to use external references and databases. For example, ‘shop’ could automatically link to all items tagged shop in the Google Maps database; similarly, ‘wheelchair’ could link to all data tagged wheelchair. ‘Good’ could have a value of, say, 7 on a 0-10 scale, with a database of user ratings acting as a reference to compare this against. ‘Me’ could automatically refer to the speaker. ‘Near’ could have an arbitrary value of, say, 0.7 miles and link into a geo-positioning database.

Think of it as a filtering mechanism. To start with, the assistant has billions upon billions of options; after recognising the words, the filters start to be applied. Firstly ‘me’ automatically gives the geo-position of the speaker, then ‘near’ automatically gives a radius covering all items in a database (or even multiple databases, e.g. one from Google and another from Bing). Then ‘shop’ filters the results to leave only the items in the shop category (it can also use related words like market, store, bazaar, plaza, outlet etc. if programmed to do so). Now ‘wheelchair’ filters these even further so that only the shops/markets/stores with wheelchairs as an item remain. ‘Good’ narrows this down a bit more, because only shops above a certain rating now remain.
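The filtering steps described above can be sketched in a few lines. This is only a toy under stated assumptions: the `Place` records, the `PLACES` list and the `find_places` function are invented for illustration, the distance from the speaker is assumed to be already known, and no real mapping service is queried.

```python
from dataclasses import dataclass


@dataclass
class Place:
    name: str
    category: str
    items: set
    rating: float          # user rating on a 0-10 scale
    distance_miles: float  # distance from the speaker ('me'), assumed precomputed


# Invented sample data standing in for a mapping and ratings database
PLACES = [
    Place("Mobility Plus", "shop", {"wheelchair", "walker"}, 8.2, 0.4),
    Place("Corner Cafe", "cafe", {"coffee"}, 9.0, 0.1),
    Place("Budget Mobility", "store", {"wheelchair"}, 5.5, 0.5),
    Place("Far Away Mobility", "shop", {"wheelchair"}, 9.1, 3.0),
]

# Related words that also count as 'shop', if programmed to do so
SHOP_WORDS = {"shop", "store", "market", "bazaar", "plaza", "outlet"}


def find_places(places, item, min_rating=7.0, max_distance=0.7):
    """Apply the 'near me', 'shop', item and 'good' filters in turn."""
    results = [p for p in places if p.distance_miles <= max_distance]  # 'near' + 'me'
    results = [p for p in results if p.category in SHOP_WORDS]         # 'shop'
    results = [p for p in results if item in p.items]                  # 'wheelchair'
    results = [p for p in results if p.rating >= min_rating]           # 'good'
    return results


print([p.name for p in find_places(PLACES, "wheelchair")])
```

Each line inside `find_places` corresponds to one word of the spoken query, which shows how little "understanding" is needed for this kind of request.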

This is, of course, a very simplistic version of what might be possible. I would be cautious about using the word ‘comprehension’ here, because this example was a straightforward query. What would happen with a more abstract interaction, or without an already available database? There is no comprehension of the subject matter going on here, rather a complex automated response. However, other models do attempt this by building symbolic artificial intelligence systems and contextual learning systems.

4. Communication

This is easier to grasp. In the above example the virtual assistant has been able to come up with an answer. Now it has to convey that information to me. A simple way is to use a generic sentence derived from the words in the original question plus the answer, something like “A good shop selling wheelchairs near ‘you’ is ‘X’” (all the words are the same except for changing me to you and adding in the shop’s name). Synonyms and other contextually learned sentences would improve this further and make it more realistic.
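A minimal sketch of that generic-sentence idea follows. The function `build_reply` and the `PRONOUN_SWAP` table are hypothetical names for this example, and the sentence pattern is fixed rather than learned.

```python
# Words that must flip when the assistant talks back to the speaker
PRONOUN_SWAP = {"me": "you", "my": "your", "i": "you"}


def build_reply(quality, place, item, relation, who, answer):
    """Reuse the words of the question and slot in the answer."""
    who = PRONOUN_SWAP.get(who.lower(), who)
    return f"A {quality} {place} selling {item} {relation} {who} is {answer}."


print(build_reply("good", "shop", "wheelchairs", "near", "me", "Mobility Plus"))
```

A single template like this breaks down quickly for differently shaped questions, which is why synonyms and learned sentence patterns matter.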

The other aspect of communication is developing programmes which feel natural in how they speak. This area seems to have made great progress over the last couple of years.

5. Learning

This one is tricky to grasp at first: how can machines learn?

It’s easier to understand if you look at the recognition example above. By classifying similar sounds together, the software is in essence creating a database. If that database is programmed to change slightly whenever a new, similar sound comes in (meaning another sample is available to use as a reference), learning is in effect taking place.
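One simple way a stored reference could "change slightly" with each new sample is to keep a running average. This is just one possible scheme, chosen for illustration; the function name `update_reference` and the two-number patterns are assumptions of this sketch.

```python
def update_reference(reference, count, new_sample):
    """Fold a new sample into the stored average pattern.

    reference  -- current average pattern for a word
    count      -- how many samples that average is based on
    new_sample -- a newly heard pattern classified as the same word
    """
    new_count = count + 1
    updated = [r + (s - r) / new_count for r, s in zip(reference, new_sample)]
    return updated, new_count


pattern, seen = [1.0, 2.0], 1
pattern, seen = update_reference(pattern, seen, [3.0, 4.0])
print(pattern, seen)
```

The more samples the average is based on, the less any single new sample moves it, so the reference settles down over time.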

Another way learning can take place is by recording the sequence in which words normally appear. In this way an artificial intelligence can keep learning the normal structural relationships between words, so it is less likely to make errors.
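Recording word order can be as simple as counting which word follows which. The sketch below does exactly that; the tiny `corpus` and the function name `learn_bigrams` are invented for illustration.

```python
from collections import Counter, defaultdict


def learn_bigrams(sentences):
    """Count, for every word, which words have been seen right after it."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for first, second in zip(words, words[1:]):
            follows[first][second] += 1
    return follows


corpus = [
    "find a shop near me",
    "find a store near me",
    "a shop near the station",
]
model = learn_bigrams(corpus)
print(model["near"].most_common())
```

Every new sentence the system hears can be added to these counts, so the picture of "normal" word order keeps improving.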

Experts generally use the term ‘deep learning’ for systems built from many-layered neural networks that improve by repeatedly correcting their own errors. For example, in the sound recognition example above, the system was using its original database to compare the new sounds to. If it makes the right guess, the algorithm was right and is reinforced. However, if it makes a mistake it can analyse the word again and tweak its own internal settings, so that next time it doesn’t make the same mistake. This may be done by giving more importance to a certain signature to which it was previously giving less weight.
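The idea of giving more weight to a signature after a mistake can be sketched with a single-layer, perceptron-style update. To be clear, this is a deliberately tiny stand-in and not a deep network; the names `predict` and `learn_from_example` and the learning rate are assumptions of this example.

```python
def predict(weights, features):
    """Guess 1 ('it is this word') if the weighted sum is positive, else 0."""
    score = sum(w * f for w, f in zip(weights, features))
    return 1 if score > 0 else 0


def learn_from_example(weights, features, correct_label, rate=0.5):
    """Leave the weights alone on a right guess, shift them on a wrong one."""
    error = correct_label - predict(weights, features)  # 0 when the guess was right
    return [w + rate * error * f for w, f in zip(weights, features)]


weights = [0.0, 0.0]
sample = [1.0, 2.0]                                 # made-up signature strengths
print(predict(weights, sample))                     # first guess, before learning
weights = learn_from_example(weights, sample, 1)    # the right answer was 1
print(weights, predict(weights, sample))
```

The second feature is larger, so it receives the bigger boost, which is the "more importance to a certain signature" step in miniature.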

Another approach is to look at the context and learn from that. For example, let’s combine the above two examples of recognising words and learning about sentence structure. If the intelligence recognises a word which doesn’t fit the usual context (which it is learning from the sentence structure data), then it can flag that word as the most likely error. It can then try matching the wrongly identified audio pattern against the other candidates further down its list of likely matches, one by one, and see which one best fits the context. This gives it a better chance of matching the right word. If successful, it can incorporate this information into its original algorithm.
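Here is a rough sketch of using context to choose between candidate words. Instead of walking down the list one by one, it scores every candidate at once by combining the sound match with how often the word has followed the previous word; that combination rule, the function name `pick_word` and all the numbers are assumptions made for illustration.

```python
def pick_word(previous_word, candidates, bigram_counts):
    """Choose the candidate that best fits both the sound and the context.

    candidates    -- list of (word, acoustic_score) pairs from recognition
    bigram_counts -- how often (previous_word, word) pairs have been seen
    """
    def combined(candidate):
        word, acoustic_score = candidate
        context = bigram_counts.get((previous_word, word), 0)
        return acoustic_score * (1 + context)

    return max(candidates, key=combined)[0]


# 'ship' sounds slightly closer, but 'shop' is what usually follows 'wheelchair'
candidates = [("ship", 0.55), ("shop", 0.45)]
counts = {("wheelchair", "shop"): 4}

print(pick_word("wheelchair", candidates, {}))      # sound alone
print(pick_word("wheelchair", candidates, counts))  # sound plus context
```

With no context data the best-sounding match wins; with it, the better-fitting word can overtake a slightly closer sound.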

Artificial Intelligence Basics – Ultimate :

Above we looked at where we are and at some of the directions in which our synthetically intelligent systems are progressing. Now let’s look at what our aspirations (wrongly or rightly) for an ultimate artificial intelligence are. What artificial intelligence basics would lead to the development of such an existence? Here I’m briefly going to consider 5 characteristics which I feel would be needed or would add something valuable:

1. Language

By this I mean the use of symbolic systems and metaphors, and the ability for these to evolve further, as happens in human language.

2. Imagination

This does not mean the ability to plan, as that can be done by learning as well. An ultimate artificial intelligence should be able to have an inner mental world where it can combine abstract concepts.

3. Creativity

Following on from imagination, another ultimate artificial intelligence basic should be the ability to create unique ideas, whether by combining abstract concepts, by an unconscious process or by inspiration.

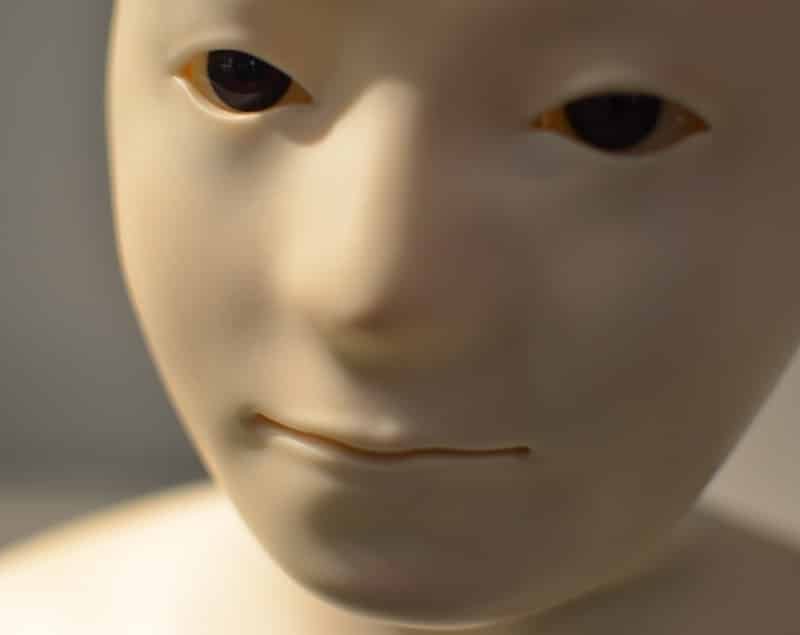

4. Consciousness

There are many descriptions of what consciousness is, and it can get a little confusing. To keep it simple, let’s just think of consciousness as what all of us intuitively know it to be: the ability to know that we know and that we exist.

5. Phenomenological experience

This ties into consciousness as well. However, what I want to stress is the ability to have feelings and emotions. I feel this is perhaps the most difficult thing to comprehend. How might an artificially intelligent being be able to have feelings and emotions such as pain, pleasure, sadness, grief and happiness? These appear to be unique and indivisible qualities.

To conclude

I feel the artificial intelligence basics that we discussed for the Lite version will probably lead us to being able to cover the language part of the Ultimate version. There could be some debate about imagination, creativity and even consciousness. As far as feelings go, I don’t think there is any doubt that we aren’t making any progress in that domain.

The thing is, we don’t understand these phenomena very well; we are more used to experiencing them. One has to question whether such things are in fact open to scientific analysis at all.

The artificial intelligence basics stated in this article are my personal opinions and insights. I formulated them intuitively and by doing a little research. If you feel there are some pertinent artificial intelligence basics that I missed, or if you have any other comments, please use the comment section below.